Microsoft acquires twice as many Nvidia AI chips as tech rivals

Microsoft bought twice as many of Nvidia’s flagship chips as any of its largest rivals in the US and China this year, as OpenAI’s biggest investor accelerated its investment in artificial intelligence infrastructure.

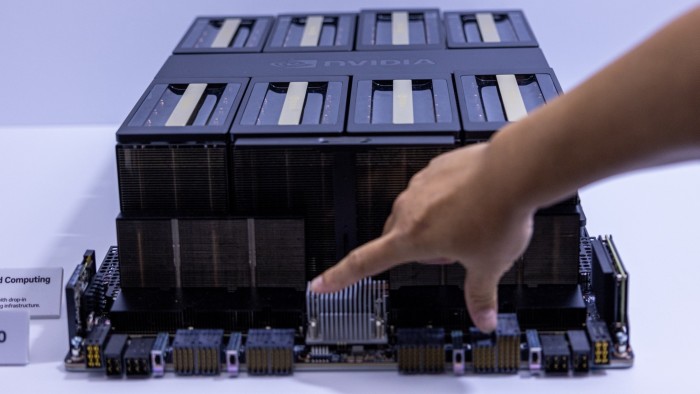

Analysts at Omdia, a technology consultancy, estimate that Microsoft bought 485,000 of Nvidia’s “Hopper” chips this year. That put Microsoft far ahead of Nvidia’s next biggest US customer, Meta, which bought 224,000 Hopper chips, as well as its cloud computing rivals Amazon and Google.

With demand outstripping supply of Nvidia’s most advanced graphics processing units for much of the past two years, Microsoft’s chip hoard has given it an edge in the race to build the next generation of AI systems.

This year, Big Tech companies have spent tens of billions of dollars on data centres running Nvidia’s latest chips, which have become the hottest commodity in Silicon Valley since the debut of ChatGPT two years ago kick-started an unprecedented surge of investment in AI.

Microsoft’s Azure cloud infrastructure was used to train OpenAI’s latest o1 model, as they race against a resurgent Google, start-ups such as Anthropic and Elon Musk’s xAI, and rivals in China for dominance of the next generation of computing.

Omdia estimates ByteDance and Tencent each ordered about 230,000 of Nvidia’s chips this year, including the H20 model, a less powerful version of Hopper that was modified to meet US export controls for Chinese customers.

Amazon and Google, which along with Meta are stepping up deployment of their own custom AI chips as an alternative to Nvidia’s, bought 196,000 and 169,000 Hopper chips respectively, the analysts said.

Omdia analyses companies’ publicly disclosed capital spending, server shipments and supply chain intelligence to calculate its estimates.

The value of Nvidia, which is now starting to roll out Hopper’s successor Blackwell, has soared to more than $3tn this year as Big Tech companies rush to assemble increasingly large clusters of its GPUs.

However, the stock’s extraordinary surge has waned in recent months amid concerns about slower growth, competition from Big Tech companies’ own custom AI chips and potential disruption to its business in China from Donald Trump’s incoming administration in the US.

ByteDance and Tencent have emerged as two of Nvidia’s biggest customers this year, despite US government restrictions on the capabilities of American AI chips that can be sold in China.

Microsoft, which has invested $13bn in OpenAI, has been the most aggressive of the US Big Tech companies in building out data centre infrastructure, both to run its own AI services such as its Copilot assistant and to rent out to customers through its Azure division.

Microsoft’s Nvidia chip orders are more than triple the number of the same generation of Nvidia’s AI processors that it purchased in 2023, when Nvidia was racing to scale up production of Hopper following ChatGPT’s breakout success.

“Good data centre infrastructure, they’re very complicated, capital-intensive projects,” Alistair Speirs, Microsoft’s senior director of Azure Global Infrastructure, told the Financial Times. “They take multi-years of planning. And so forecasting where our growth will be with a little bit of buffer is important.”

Tech companies around the globe will spend an estimated $229bn on servers in 2024, according to Omdia, led by Microsoft’s $31bn in capital expenditure and Amazon’s $26bn. The top 10 buyers of data centre infrastructure, which now include relative newcomers xAI and CoreWeave, make up 60 per cent of global investment in computing power.

Vlad Galabov, director of cloud and data centre research at Omdia, said some 43 per cent of spending on servers went to Nvidia in 2024.

“Nvidia GPUs claimed a tremendously high share of the server capex,” he said. “We’re close to the peak.”

While Nvidia still dominates the AI chip market, its Silicon Valley rival AMD has been making inroads. Meta bought 173,000 of AMD’s MI300 chips this year, while Microsoft bought 96,000, according to Omdia.

Big Tech companies have also stepped up use of their own AI chips this year, as they try to reduce their reliance on Nvidia. Google, which has for a decade been developing its “tensor processing units”, or TPUs, and Meta, which debuted the first generation of its Meta Training and Inference Accelerator chip last year, each deployed about 1.5mn of their own chips.

Amazon, which is investing heavily in its Trainium and Inferentia chips for cloud computing customers, deployed about 1.3mn of those chips this year. Amazon said this month that it plans to build a new cluster using hundreds of thousands of its latest Trainium chips for Anthropic, an OpenAI rival in which Amazon has invested $8bn, to train the next generation of its AI models.

Microsoft, however, is far earlier in its effort to build an AI accelerator to rival Nvidia’s, with only about 200,000 of its Maia chips installed this year.

Speirs said that using Nvidia’s chips still required Microsoft to make significant investments in its own technology to offer a “distinctive” service to customers.

“To build the AI infrastructure, in our experience, is not just about having the best chip, it’s also about having the right storage components, the right infrastructure, the right software layer, the right host management layer, error correction and all these other components to build that system,” he said.
